Press release

Who's Responsible When a Chatbot Gets It Wrong?

As generative artificial intelligence spreads across health, wellness, and behavioral health settings, regulators and major professional groups are drawing a sharper line: chatbots can support care, but they should not be treated as psychotherapy. That warning is now colliding with a practical question that clinics, app makers, insurers, and attorneys all keep asking.When a chatbot gets it wrong, who owns the harm?

Recent public guidance from the American Psychological Association (APA) cautions that generative AI chatbots and AI-powered wellness apps lack sufficient evidence and oversight to safely function as mental health treatment, urging people not to rely on them for psychotherapy or psychological care. Separately, medical and regulatory conversations are moving toward risk-based expectations for AI-enabled digital health tools, with more attention on labeling, monitoring, and real-world safety.

This puts treatment centers and digital health teams in a tight spot. You want to help people between sessions. You want to answer the late-night "what do i do right now" messages. You also do not want a tool that looks like a clinician, talks like a clinician, and then leaves you holding the bag when it gives unsafe guidance.

A warning label is not a care planThe "therapy vibe" problem

Here's the thing. A lot of chatbots sound calm, confident, and personal. That tone can feel like therapy, even when the product says it is not. Professional guidance is getting more blunt about this mismatch, especially for people in distress or young people.

Regulators in the UK are also telling the public to be careful with mental health apps and digital tools, including advice aimed at people who use or recommend them. When public agencies start publishing "how to use this safely" guidance, it is usually a sign they are seeing real confusion and real risk.

The standard-of-care debate is getting louder

In clinical settings, "standard of care" is not a slogan. It is the level of reasonable care expected in similar circumstances. As more organizations plug chatbots into intake flows, aftercare, and patient messaging, the question becomes simple and uncomfortable.

If you offer a chatbot inside a treatment journey, do you now have clinical responsibility for what it says?

That debate is not theoretical anymore. Industry policy groups are emphasizing transparency and accountability in health care AI, including the idea that responsibility should sit with the parties best positioned to understand and reduce AI risk.

Liability does not disappear, it just moves aroundWho can be pulled in when things go wrong

When harm happens, liability often spreads across multiple layers, not just one "bad answer." Depending on the facts, legal theories can involve:

*

Product liability or negligence claims tied to design, testing, warnings, or foreseeable misuse

*

Clinical malpractice theories, if the chatbot functioned like care delivery inside a clinical relationship

*

Corporate negligence and supervision issues if humans fail to monitor, correct, or escalate risks

*

Consumer protection concerns if marketing implies therapy or clinical outcomes without support

Public reporting and enforcement attention around how AI "support" is described, especially for minors, is increasing.

This is also where the "wellness" label matters. In the U.S., regulators have long drawn lines between low-risk wellness tools and tools that claim to diagnose, treat, or mitigate disease. That boundary is still shifting, especially as AI features become more powerful and more persuasive.

The duty to warn does not fit neatly into a chatbot box

Clinicians and facilities know the uncomfortable phrase: duty to warn. If a person presents a credible threat to themselves or others, you do not shrug and point to the terms of service.

A chatbot cannot carry that duty by itself. It can only trigger a workflow.

So if a chatbot is present in your care ecosystem, the safety question becomes operational: Do you have reliable detection, escalation, and human response? If not, a "we are not therapy" disclaimer will feel thin in the moment that matters.

In many programs, that safety line starts with the facility's human team and the way the tool is configured, monitored, and limited to specific tasks.

For example, some organizations position chatbots strictly as administrative support and practical nudges, while the clinical work stays with clinicians. People in treatment may still benefit from structured care options, including services at an Addiction Treatment Center [https://luminarecovery.com/] that can provide real assessment, real clinicians, and real crisis pathways when needed.

Informed consent needs to be more than a pop-upMake the tool's role painfully clear

If you are using a chatbot in any care-adjacent setting, your consent language needs to do a few things clearly, in plain words:

*

What it is (a support tool, not a clinician)

*

What it can do (reminders, coping prompts, scheduling help, basic education)

*

What it cannot do (diagnosis, individualized treatment plans, emergency response)

*

What to do in urgent situations (call a local emergency number, contact the on-call team, go to an ER)

*

How data is handled (what is stored, who can see it, how long it is kept)

Professional groups are urging more caution about relying on genAI tools for mental health treatment and emphasizing user safety, evidence, and oversight.

Consent is also about expectations, not just signatures

People often treat chatbots like a private diary with a helpful voice. That creates two problems.

First, over-trust. Users follow advice they should question.

Second, under-reporting. Users disclose risk to a bot and assume that "someone" will respond.

Your consent process should address both. And it should live in more than one place: onboarding, inside the chat interface, and in follow-up communications.

How treatment centers can use chatbots safely without playing clinicianKeep the chatbot in the "assist" lane

Used carefully, chatbots can reduce friction in the parts of care that frustrate people the most. The scheduling back-and-forth. The "where do I find that worksheet?" The reminders people genuinely want but forget to set.

Safer, lower-risk use cases include:

*

Appointment reminders and check-in prompts

*

"Coping menu" suggestions that point to known, approved skills

*

Medication reminders that route questions to staff

*

Administrative Q&A (hours, locations, what to bring, how to reschedule)

*

Educational content that is clearly labeled and sourced

This matters for programs serving people with complex needs. Someone seeking Treatment for Mental Illness [https://mentalhealthpeak.com/] may need fast access to human support and clinically appropriate care, not a chatbot improvising a response to a high-stakes situation.

Build escalation like you mean it

A safe design assumes the chatbot will see messages that sound like crisis, self-harm, violence, abuse, relapse risk, or medical danger. Your system should do three things fast:

*

Detect high-risk phrases and patterns

*

Escalate to a human workflow with clear ownership

*

Document what happened and what the response was

The FDA's digital health discussions around AI-enabled tools increasingly emphasize life-cycle thinking: labeling, monitoring, and real-world performance, not just a one-time launch decision. Even if your chatbot is not a regulated medical device, the safety logic still applies.

In practice, escalation can look like a warm handoff message, a click-to-call feature, or an automatic alert to an on-call clinician, depending on your program and jurisdiction. But it has to be tested. Not assumed.

Documentation, audit trails, and the "show your work" momentIf it is not logged, it did not happen

When a chatbot is part of a care pathway, you should assume you will eventually need to answer questions like:

*

What did the chatbot say, exactly, and when?

*

What model or version produced that output?

*

What safety filters were active?

*

What did the user see as warnings or instructions?

*

Did a human get alerted? How fast? What action was taken?

Audit trails are not fun, but they are your best friend when something goes sideways. They also help you improve the system. You can spot failure modes like repeated confusion about withdrawal symptoms, unsafe "taper" advice, or false reassurance during a crisis.

Avoid the "shadow chart" problem

If chatbot interactions sit outside the clinical record, you can end up with a split reality: the patient thinks they disclosed something important, while the clinician never saw it. That is a real operational risk, and it can turn into a legal one.

Organizations are increasingly expected to be transparent with both patients and clinicians about the use of AI in care settings. Transparency also means training staff so they know how the chatbot works, where it fails, and what to do when it triggers an alert.

For facilities supporting substance use recovery, clear pathways are critical. Someone looking for a rehab in Massachusetts [https://springhillrecovery.com/] may use a chatbot late at night while cravings spike. Your system should be built for that reality, with escalation and human support options that do not require perfect user behavior.

What responsible use looks like this yearA practical checklist you can act on

Organizations that want the benefits of chat support without the "accidental clinician" risk are moving toward a few common moves:

*

Narrow scope: lock the chatbot into specific functions, not open-ended therapy conversations

*

Plain-language consent: repeat it, not just once, and make it easy to understand

*

Crisis routing: escalation to humans with tested response times

*

Human oversight: regular review of transcripts, failure patterns, and user complaints

*

Version control: log model changes and re-test after updates

*

Marketing discipline: do not imply therapy, diagnosis, or outcomes you cannot prove

The point is care, not cleverness

People want support that works when they are tired, stressed, or scared. That is when a chatbot can feel comforting and also when it can do the most damage if it gets it wrong.

If you are running a program, you can treat chat as a helpful layer, like a front desk that never sleeps, while keeping clinical judgment where it belongs: with trained humans. And if you are building these tools, you can stop pretending that disclaimers alone are protection.

The responsibility question is not going away. It is getting sharper.

As digital mental health tools expand, public agencies are also urging people to use them carefully and to understand what they can and cannot do. For anyone offering chatbot support as part of addiction and recovery services, the safest path is clear boundaries, fast escalation, and real documentation. Someone should always be able to reach humans when risk rises, not just a chat window. That is where programs like Wisconsin Drug Rehab [https://wisconsinrecoveryinstitute.com/] fit into the bigger picture: care that is accountable, supervised, and real.

Media Contact

Company Name: luminarecovery

Email:Send Email [https://www.abnewswire.com/email_contact_us.php?pr=whos-responsible-when-a-chatbot-gets-it-wrong]

Country: United States

Website: https://luminarecovery.com/

Legal Disclaimer: Information contained on this page is provided by an independent third-party content provider. ABNewswire makes no warranties or responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you are affiliated with this article or have any complaints or copyright issues related to this article and would like it to be removed, please contact retract@swscontact.com

This release was published on openPR.

Permanent link to this press release:

Copy

Please set a link in the press area of your homepage to this press release on openPR. openPR disclaims liability for any content contained in this release.

You can edit or delete your press release Who's Responsible When a Chatbot Gets It Wrong? here

News-ID: 4383254 • Views: …

More Releases from ABNewswire

Beard Oil Market Size to Reach USD 1.97 Billion by 2030 as Premium Grooming Beco …

Mordor Intelligence has released a comprehensive assessment of the beard oil market, highlighting how premiumization, digital commerce, and evolving male grooming norms are reshaping demand worldwide.

Beard Oil Market Size and Growth Outlook

According to Mordor Intelligence, the global beard oil market size [https://www.mordorintelligence.com/industry-reports/beard-oil-market?utm_source=abnewswire] is valued at USD 1.42 billion in 2025 and is projected to reach USD 1.97 billion by 2030, expanding at a CAGR of 6.81% during the forecast period.…

Track and Trace solutions market size to Reach USD 5.93 Billion by 2031, Support …

Mordor Intelligence has published a new report on the track and trace solutions market, offering a comprehensive analysis of trends, growth drivers, and future projections.

Track and Trace Solutions Market Analysis

According to Mordor Intelligence, the track and trace solutions market size [https://www.mordorintelligence.com/industry-reports/track-and-trace-solutions-market?utm_source=abnewswire] is estimated at USD 3.83 billion in 2026 and is projected to reach USD 5.93 billion by 2031, reflecting consistent expansion over the forecast period. This growth is supported…

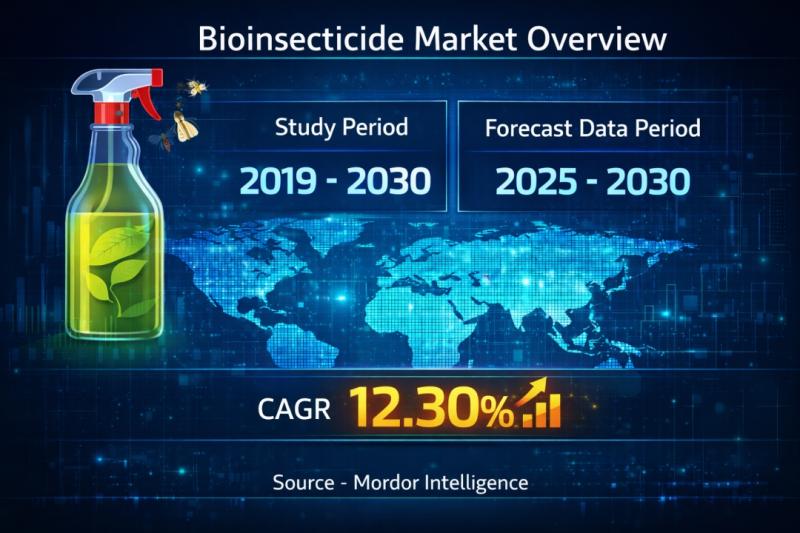

Bioinsecticide Market is expected to register a CAGR of 12.3% by 2030 - Mordor I …

Mordor Intelligence has published a new report on the bioinsecticide market, offering a comprehensive analysis of trends, growth drivers, and future projections.

Introduction: Growing Demand in the Bioinsecticide Market

According to Mordor Intelligence, the global bioinsecticide market [https://www.mordorintelligence.com/industry-reports/global-bioinsecticides-market-industry?utm_source=abnewswire] is expected to grow at a CAGR of 12.3% during the forecast period 2025 - 2030, driven by the rising demand for safer, eco-friendly pest control solutions. Fruits and vegetables represent the largest application…

Molded Case Circuit Breaker Market Size Shows USD 1.87 Billion Opportunity by 20 …

Mordor Intelligence has published a new report on the Molded Case Circuit Breaker Market, offering a comprehensive analysis of trends, growth drivers, and future projections.

Molded Case Circuit Breaker Market Overview

The molded case circuit breaker market [https://www.mordorintelligence.com/industry-reports/molded-case-circuit-breaker-market?utm_source=abnewswire] size is estimated at USD 1.36 billion in 2025 and is projected to reach USD 1.87 billion by 2030, registering a CAGR of 6.54% during the forecast period. In addition, expanding real estate projects…

More Releases for People

People Trust People More Than Brands - And That Gap Is Growing

Why Personal Recommendations Are Reshaping Business Growth Worldwide

FOR IMMEDIATE RELEASE

Consumers worldwide are placing greater trust in personal recommendations than in brands or advertising, reinforcing a growing shift in how purchasing decisions are made and how businesses must now earn credibility.

As audiences become more sceptical of advertising and increasingly overwhelmed by digital noise, trust is moving away from brand messaging and toward recommendations from people they know.

"People don't wake up trusting…

Find UK People Marks 9 Years of Trusted People Tracing Services

FOR IMMEDIATE RELEASE

Find UK People® Marks 9 Years of Trusted People Tracing Services in the UK

📍 Sussex, United Kingdom - 13 July 2025

Find UK People®, the trusted online tracing specialists, proudly celebrates its 9th year of providing fast, accurate, and fully compliant people tracing services across the United Kingdom.

Founded in 2016, the company has become a leading provider of address tracing services for legal professionals, landlords, debt recovery firms, and…

Helperschain: People Helping People

Helperschain some would say is a ponzi scheme, but we do not think we are. Helperschain is a membership scheme that believes in the ability of group financial home help. We assume that if all members who we call chainers hook their own chain to our existing long chain of chainers we are able to carry on growing and keep helping each other. Our slogans is " People helping People",…

Loans for people on benefits: Physically disabled people can meet their needs

Most of the UK citizens are surviving on benefits. This kind of survival occurs due to varied causes including physical ailment or deficiency, unemployment and any other prolonged sickness. Many lenders would refuse or avoid offering these sufficient loans to such people. At that stage of urgency, the people have to suffer a lot for the desired funds.

For their advantage and convenience, the loans for people on benefits are…

Gomez for the People, the People for Gomez

The local community members of East Northport joined forces to “rally in the streets”, in support of congressional candidate, John Gomez.

On Sunday October 24th, Justin Abrams, Executive director of Black Rose Productions, Inc. visited a rally set up by acting founder and director of the Conservation Society for Action, Mr. Stephen Flanagan.

The rally attracted a substantial amount of followers including war veterans, children, and local communitarians. The CSA…

People-Search-Find-USA.com, Surefire Way To Find People In USA For Free

People searches on the web continue to soar. Whether it's a friend trying to reconnect to a childhood companion or someone searching for a business colleague or a long lost lover, the number of people searches on the major search engines is staggering! Today you can run a people search and find people in the USA for free.

It is estimated that up to 30% of all search engine searches are…