Press release

Smart, Smooth, and Sometimes Dangerously Wrong: AI's Hidden Risks in Medicine

As millions of people and thousands of clinicians begin using general-purpose AI tools (such as ChatGPT, Grok, Gemini, and others) for medical questions and image interpretation, new case reports and peer-reviewed studies show these systems can confidently produce convincing but false medical information - in some cases directly misleading patients and contributing to harm.

"AI is already a powerful assistant. But multiple recent examples make one point painfully clear: when an AI sounds authoritative, it is not the same as being clinically correct," said Dr. Neel Navinkumar Patel, cardiovascular medicine fellow at the University of Tennessee, and researcher in AI and digital health. "Hospitals and regulators must insist on human-in-the-loop systems and clear labeling of what these models can and cannot safely do."

Key Real-World Examples (What Happened)

1) A patient hospitalized after following ChatGPT's "diet" advice

A case published in Annals of Internal Medicine: Clinical Cases describes a 60-year-old man who, after consulting ChatGPT for dietary advice, replaced table salt (sodium chloride) with sodium bromide. He developed bromide toxicity ("bromism"), with paranoia and hallucinations, and was hospitalized. The authors demonstrated that some prompts produced responses naming bromide as a chloride substitute without adequate medical warnings - an outcome that likely contributed to real harm.

Why it matters: This is a documented, peer-reviewed instance where AI-derived advice was linked to direct patient harm, not just a hypothetical risk.

Eichenberger E, Nguyen H, McDonald R, et al. A Case of Bromism Influenced by Use of Artificial Intelligence. Ann Intern Med Clin Cases. 2025;4(8). doi:10.7326/aimcc.2024.1260.

2) Researchers show chatbots can generate polished medical misinformation and fake citations

A study in Annals of Internal Medicine found that major LLMs (OpenAI's GPT family, Google's Gemini, xAI's Grok, and others) can be manipulated to produce authoritative-sounding false medical advice - even inventing scientific citations to support fabricated claims. Only one model, trained with stronger safety constraints, resisted this behavior.

Why it matters: AI outputs can include fabricated references and polished reasoning that appear verified - making misinformation far more persuasive and dangerous.

Li CW, Gao X, Ghorbani A, et al. Assessing the System-Instruction Vulnerabilities of Large Language Models to Malicious Conversion Into Health Disinformation Chatbots. Ann Intern Med. Published online June 24, 2025. doi:10.7326/ANNALS-24-03933.

3) AI model invented a non-existent brain structure ("basilar ganglia")

Google's Med-Gemini (a healthcare-oriented version of Gemini) produced the term "basilar ganglia" - a nonexistent structure combining two distinct anatomical regions. The error appeared in launch materials and a research preprint, and was flagged publicly by neurologists. Google later edited its post and called it a typo, but the incident became a prominent example of "hallucination" in medicine.

Why it matters: When AI invents anatomy or diagnoses, clinicians may overlook errors (automation bias), or downstream systems could propagate those mistakes.

(Source: The Verge: "Google's Med-Gemini Hallucinated a Nonexistent Brain Structure." 2024.)

4) Viral user posts and clinician tests show image-analysis failures (Grok, ChatGPT, Gemini)

After public encouragement to upload X-rays and MRIs, users posted examples where Elon Musk's Grok flagged fractures or abnormalities - some celebrated as "AI diagnoses." Radiologists who later tested Grok and other chatbots found inconsistent performance, false positives, and missed findings. Independent clinical evaluations concluded these tools are not reliable replacements for certified radiology workflows.

Why it matters: Consumer anecdotes highlight potential, but clinical rollout must be evidence-based.

(Source: STAT News: "AI Chatbots and Medical Imaging: Radiologists Warn of Misdiagnosis Risk." 2024.)

5) Studies show general LLMs perform poorly on diagnostic tasks (ECG/CXR)

Peer-reviewed work testing multimodal LLMs on ECG and imaging interpretation shows major limitations. For example, JMIR studies evaluating ChatGPT-4V on ECG interpretation reported low accuracy in visually driven diagnoses, and other benchmarks showed perceptual failures (orientation, contrast, basic checks) unacceptable for clinical use.

Why it matters: Clinicians should not treat off-the-shelf chatbots as medical-grade interpreters without regulatory clearance.

(Source: JMIR Med Inform. 2024.)

How These Errors Happen (Short Explainer)

* LLMs predict text, not truth. They are designed to generate statistically likely continuations of text, not verify accuracy - leading to fluent but false statements ("hallucinations"). (Reuters)

* Visual reasoning gaps. Even image-capable models may misread orientation or labeling because they weren't built for clinical imaging. (arXiv.org)

* Prompt manipulation. Researchers showed that simple instruction changes can make general models output dangerous falsehoods.
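The first point above, that a language model picks likely text rather than true text, can be illustrated with a deliberately tiny sketch. This is not how any production chatbot is built. It is a toy word-pair counter trained on a made-up, intentionally skewed corpus, and the names `corpus`, `train_bigrams`, and `most_likely_next` are hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict

# Made-up toy corpus (an assumption for illustration only).
# The false statement appears twice, the true one once.
corpus = (
    "the heart has three chambers . "
    "the heart has three chambers . "
    "the heart has four chambers . "
)

def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def most_likely_next(counts, word):
    """Return the most frequent continuation; truth is never checked."""
    return counts[word].most_common(1)[0][0]

model = train_bigrams(corpus)
print(most_likely_next(model, "has"))  # prints "three"
```

The toy model completes "the heart has" with "three" simply because that continuation is more frequent in its training text, even though the human heart has four chambers. Real LLMs are vastly more sophisticated, but the sketch shows why fluency alone is not evidence of accuracy.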

Recommendations

For Patients & the Public

* Treat chatbots as informational only - never for diagnosis, medication changes, or urgent care decisions.

* Save chat logs and show them to your clinician - a confident AI diagnosis does not mean it's correct.

For Clinicians & Hospital Leaders

* Require human sign-off for all AI-generated diagnostic outputs - this is also supported by the American Medical Association.

* Validate models locally before use. Rely on FDA-cleared systems when available. (FDA guidance)

* Build policies defining when and how staff may use chatbots, and document AI involvement in patient records.

For Regulators & Industry

* Require clear labeling when LLMs are part of any medical workflow and mandate transparent performance metrics with post-market surveillance.

* Mandate adversarial testing to detect vulnerabilities that allow health disinformation or unsafe recommendations.

"Generative AI is already reshaping medicine but not yet in a way that guarantees patient safety when it comes to diagnosis," said Dr. Neel N. Patel. "These recent, documented failures show the cost of over-trusting fluent AI. The right path is responsible augmentation: transparent tools, rigorous validation, human sign-off, and stronger regulation so AI helps clinicians not mislead patients."

References

* Eichenberger E, Nguyen H, McDonald R, et al. A Case of Bromism Influenced by Use of Artificial Intelligence. Ann Intern Med Clin Cases. 2025;4(8). doi:10.7326/aimcc.2024.1260.

* Li CW, Gao X, Ghorbani A, et al. Assessing the System-Instruction Vulnerabilities of Large Language Models to Malicious Conversion Into Health Disinformation Chatbots. Ann Intern Med. Published online June 24, 2025. doi:10.7326/ANNALS-24-03933.

* The Verge. "Google's Med-Gemini Hallucinated a Nonexistent Brain Structure." 2024.

* STAT News. "AI Chatbots and Medical Imaging: Radiologists Warn of Misdiagnosis Risk." 2024.

* JMIR Med Inform. "Evaluation of GPT-4V on ECG Interpretation Tasks." 2024.

Media Contact

Neel N Patel, MD

Department of Cardiovascular Medicine

University of Tennessee Health Science Center at Nashville

St. Thomas Heart Institute / Ascension St. Thomas Hospital, Nashville, TN, USA

(332) 213-7902

neelnavinkumarpatel@gmail.com

Website: https://www.linkedin.com/in/neel-navinkumar-patel-md


This release was published on openPR.

Permanent link to this press release:

Copy

Please set a link in the press area of your homepage to this press release on openPR. openPR disclaims liability for any content contained in this release.

You can edit or delete your press release Smart, Smooth, and Sometimes Dangerously Wrong: AI's Hidden Risks in Medicine here

News-ID: 4225976 • Views: …

More Releases from Getnews

Alex Wilcox Continues to Shape Customer-Focused Air Travel Through Leadership at …

Image: https://www.globalnewslines.com/uploads/2026/02/1771877935.jpg

Alex Wilcox

Co-Founder and CEO of JSX builds on decades of aviation experience to expand simplified regional flying model.

DALLAS - February 23, 2026 - Alex Wilcox, Co-Founder and CEO of JSX, continues to advance a streamlined approach to regional air travel, drawing on more than three decades of experience in commercial aviation and airline operations.

Alex Wilcox [https://www.newstrail.com/alex-wilcox-dallas-redefining-air-travel-with-purpose-and-innovation/] co-founded JetSuiteX, now known as JSX, with the goal of offering travelers a…

Jimenez Law Firm, P.A. Recognized as a Top-Rated Jacksonville Personal Injury La …

Image: https://www.globalnewslines.com/uploads/2026/02/1771874853.jpg

Jacksonville, FL - February 23, 2026 - Jimenez Law Firm, P.A. of Jacksonville [https://www.jimenez-lawfirm.com/jacksonville/], a respected legal practice serving Northeast Florida, continues to earn recognition as a top-rated personal injury lawyer dedicated to protecting the rights of accident victims and their families.

With a strong reputation built on client satisfaction, courtroom experience, and a commitment to justice, Jimenez Law Firm, P.A. has become a trusted name for individuals seeking compensation…

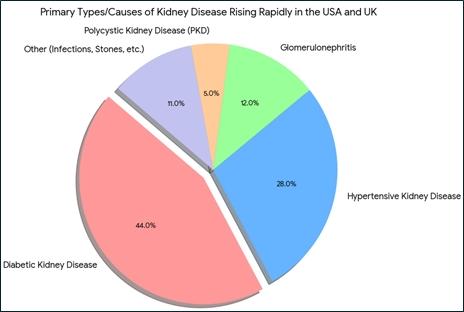

Chronic Kidney Disease Rising Rapidly in the USA and UK: Experts Point to Lifest …

Chronic Kidney Disease (CKD) is emerging as a major public health concern in developed countries such as the United States and the United Kingdom. Despite advanced healthcare infrastructure, the incidence of CKD stages continues to rise, largely driven by modern food habits, sedentary lifestyles, and metabolic conditions like diabetes and high blood pressure.

Medical studies consistently identify type 2 diabetes and hypertension as the leading causes of kidney damage. High sodium…

MSP News Global & Dame Marie Diamond Unveil Expert Secrets to Magnetizing Abunda …

Expert Secrets for the 2026 Fire Horse Year. In this landmark collaboration by MSP News Global, world-renowned Feng Shui Master Dame Marie Diamond joins Mark Stephen Pooler and 20 other global experts.

Image: https://authoritypresswire.com/wp-content/uploads/2026/02/1000059118.jpg

MSP News Global proudly presents a comprehensive feature, "Expert Secrets: How to Magnetize Abundance & Prosperity in 2026: The Fire Horse Year," led by the esteemed Feng Shui master, Dame Marie Diamond. This in-depth article, published on the…

More Releases for Med

Elite Med Spa Partners with Salterra Marketing for Med Spa Growth in 2026

Elite Med Spa announces a 2026 partnership with Salterra Marketing to accelerate Med Spa Growth through local SEO, paid media, conversion optimization, and results tracking.

Elite Med Spa, a Scottsdale-based medical spa known for advanced aesthetics and body contouring, announced today a strategic partnership with Salterra Marketing to drive Med Spa Growth in 2026 through performance-focused digital strategy, local visibility, and conversion optimization.

The collaboration is designed to strengthen Elite Med Spa's…

10x Med Spa Marketing Launches to Help Med Spas Dominate Local Search, Paid Ads, …

Houston, TX - October 23, 2025 - 10x Med Spa Marketing [https://10xmedspamarketing.com/], a new performance-driven digital marketing agency founded by Martin Hristov, has officially launched to help med spas multiply their growth through data-driven SEO, website design, Google Ads, and paid social campaigns.

The agency's mission is to bridge the gap between modern med-spa marketing and measurable ROI - empowering clinic owners to attract high-value patients, increase bookings, and future-proof their…

Scottsdale Med Spa Unveils Comprehensive Injectable Treatment Services

Scottsdale Med Spa, a leading aesthetic treatment center located in the heart of Old Town Scottsdale, is proud to announce its comprehensive range of injectable treatments designed to help clients refresh, renew, and rejuvenate their appearance without surgery. Under the expert guidance of Dr. Vincent Marino, MD, and skilled injection specialist Melissa Newman, BSN, RN, CLT, the medical spa offers personalized care using the latest techniques in aesthetic medicine.

SCOTTSDALE, AZ…

Fetal Monitor Transducer market: New Prospects to Emerge by 2028 | Unimed Medica …

"

The Fetal Monitor Transducer global market is thoroughly researched in this report, noting important aspects like market competition, global and regional growth, market segmentation and market structure. The report author analysts have estimated the size of the global market in terms of value and volume using the latest research tools and techniques. The report also includes estimates for market share, revenue, production, consumption, gross profit margin, CAGR, and other key…

CAS MED SPA OFFERS COOLSCULPTING PROMOTION

MARIETTA, GEORGIA- APRIL 29, 2019- CAS MED SPA is excited to announce its CoolSculpting service, as well as a money saving promotional code. Available on their website at https://cs.cassmedspa.com/cas-coolsculpting-best-offer, patients can save $200 off their treatment. Going on through SPRING 2019, CAS MED SPA offers their best deal ever, up to $1500 with the purchase of six or more treatments!

CoolSculpting is a revolutionary way to eliminate body fat. Unlike liposuction…

EuropeSpa med quality standards published in book form

Internationally valid quality and safety criteria for health and medical wellness listed in a comprehensive compendium

Stuttgart/Wiesbaden, 3 September 2012 | The European Spas Association has published the EuropeSpa med criteria for medical spas and medical wellness providers. The book Quality Standard for Medical Spas and Medical Wellness Providers in Europe was unveiled today by publishing house Schweizerbart Verlag (www.schweizerbart.de) in Stuttgart.

For the first time, this compendium sets out about…