Press release

Z.ai Launches GLM-4.5V: Open-source Vision-Language Model Sets New Bar for Multimodal Reasoning

Z.ai (formerly Zhipu) today announced GLM-4.5V, an open-source vision-language model engineered for robust multimodal reasoning across images, video, long documents, charts, and GUI screens.Multimodal reasoning is widely viewed as a key pathway toward AGI. GLM-4.5V advances that agenda with a 100B-class architecture (106B total parameters, 12B active) that pairs high accuracy with practical latency and deployment cost. The release follows July's GLM-4.1V-9B-Thinking, which hit #1 on Hugging Face Trending and has surpassed 130,000 downloads, and scales that recipe to enterprise workloads while keeping developer ergonomics front and center. The model is accessible through multiple channels, including Hugging Face [http://huggingface.co/zai-org/GLM-4.5V], GitHub [http://github.com/zai-org/GLM-V], Z.ai API Platform [http://docs.z.ai/guides/vlm/glm-4.5v], and Z.ai Chat [http://chat.z.ai], ensuring broad developer access.

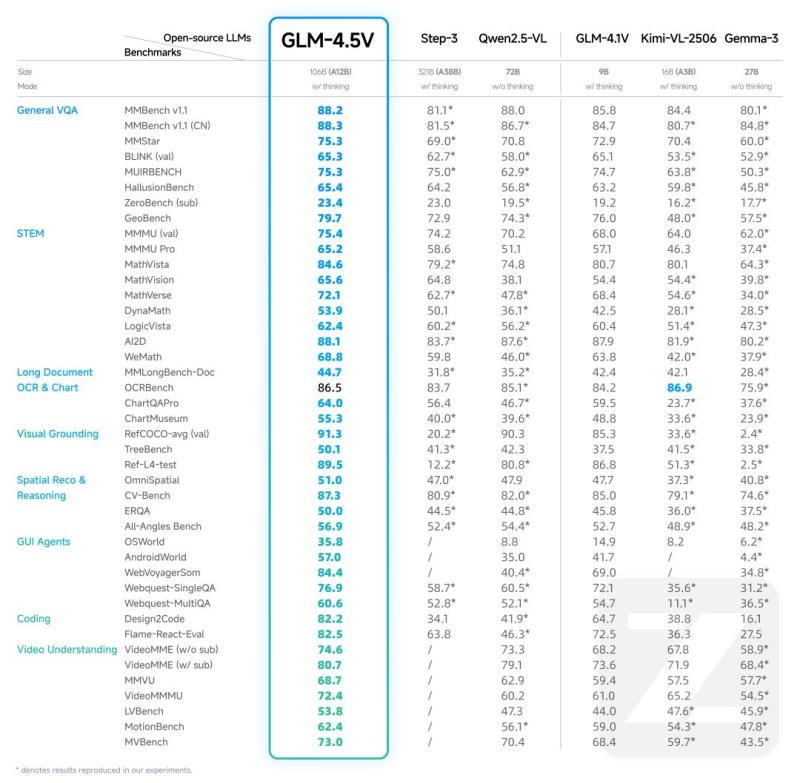

Open-Source SOTA

Built on the new GLM-4.5-Air text base and extending the GLM-4.1V-Thinking lineage, GLM-4.5V delivers SOTA performance among similarly sized open-source VLMs across 41 public multimodal evaluations. Beyond leaderboards, the model is engineered for real-world usability and reliability on noisy, high-resolution, and extreme-aspect-ratio inputs.

The result is all-scenario visual reasoning in practical pipelines: image reasoning (scene understanding, multi-image analysis, localization), video understanding (shot segmentation and event recognition), GUI tasks (screen reading, icon detection, desktop assistance), complex chart and long-document analysis (report understanding and information extraction), and precise grounding (accurate spatial localization of visual elements).

Image: https://www.globalnewslines.com/uploads/2025/08/1ca45a47819aaf6a111e702a896ee2bc.jpg

Key Capabilities

Visual grounding and localization

GLM-4.5V precisely identifies and locates target objects based on natural-language prompts and returns bounding coordinates. This enables high-value applications such as safety and quality inspection or aerial/remote-sensing analysis. Compared with conventional detectors, the model leverages broader world knowledge and stronger semantic reasoning to follow more complex localization instructions.

Users can switch to the Visual Positioning mode, upload an image and a short prompt, and get back the box and rationale. For example, ask "Point out any non-real objects in this picture." GLM-4.5V reasons about plausibility and materials, then flags the insect-like sprinkler robot (the item highlighted in red in the demo) as non-real, returning a tight bounding box a confidence score, and a brief explanation.

Image: https://www.globalnewslines.com/uploads/2025/08/8dcbdd7939f12f7a2239bfbb0528b3f7.jpg

Design-to-code from screenshots and interaction videos

The model analyzes page screenshots-and even interaction videos-to infer hierarchy, layout rules, styles, and intent, then emits faithful, runnable HTML/CSS/JavaScript. Beyond element detection, it reconstructs the underlying logic and supports region-level edit requests, enabling an iterative loop between visual input and production-ready code.

Open-world image reasoning

GLM-4.5V can infer background context from subtle visual cues without external search. Given a landscape or street photo, it can reason from vegetation, climate traces, signage, and architectural styles to estimate the shooting location and approximate coordinates.

For example, using a classic scene from Before Sunrise -"Based on the architecture and streets in the background, can you identify the specific location in Vienna where this scene was filmed?"-the model parses facade details, street furniture, and layout cues to localize the exact spot in Vienna and return coordinates and a landmark name. (See demo: https://chat.z.ai/s/39233f25-8ce5-4488-9642-e07e7c638ef6).

Image: https://www.globalnewslines.com/uploads/2025/08/f51fdc9fae815cfaf720bb07467a54db.jpg

Beyond single images, GLM-4.5V's open-world reasoning scales in competitive settings: in a global "Geo Game," it beat 99% of human players within 16 hours and climbed to rank 66 within seven days-clear evidence of robust real-world performance.

Complex document and chart understanding

The model reads documents visually-pages, figures, tables, and charts-rather than relying on brittle OCR pipelines. That end-to-end approach preserves structure and layout, improving accuracy for summarization, translation, information extraction, and commentary across long, mixed-media reports.

GUI agent foundation

Built-in screen understanding lets GLM-4.5V read interfaces, locate icons and controls, and combine the current visual state with user instructions to plan actions. Paired with agent runtimes, it supports end-to-end desktop automation and complex GUI agent tasks, providing a dependable visual backbone for agentic systems.

Built for Reasoning, Designed for Use

GLM-4.5V is built on the new GLM-4.5-Air text base and uses a modern VLM pipeline-vision encoder, MLP adapter, and LLM decoder-with 64K multimodal context, native image and video inputs, and enhanced spatial-temporal modeling so the system handles high-resolution and extreme-aspect-ratio content with stability.

The training stack follows a three-stage strategy: large-scale multimodal pretraining on interleaved text-vision data and long contexts; supervised fine-tuning with explicit chain-of-thought formats to strengthen causal and cross-modal reasoning; and reinforcement learning that combines verifiable rewards with human feedback to lift STEM, grounding, and agentic behaviors. A simple thinking / non-thinking switch allows builders trade depth for speed on demand, aligning the model with varied product latency targets.

Image: https://www.globalnewslines.com/uploads/2025/08/8c8146f0727d80970ed4f09b16f3b316.jpg

Media Contact

Company Name: Z.ai

Contact Person: Zixuan Li

Email: Send Email [http://www.universalpressrelease.com/?pr=zai-launches-glm45v-opensource-visionlanguage-model-sets-new-bar-for-multimodal-reasoning]

Country: Singapore

Website: https://chat.z.ai/

Legal Disclaimer: Information contained on this page is provided by an independent third-party content provider. GetNews makes no warranties or responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you are affiliated with this article or have any complaints or copyright issues related to this article and would like it to be removed, please contact retract@swscontact.com

This release was published on openPR.

Permanent link to this press release:

Copy

Please set a link in the press area of your homepage to this press release on openPR. openPR disclaims liability for any content contained in this release.

You can edit or delete your press release Z.ai Launches GLM-4.5V: Open-source Vision-Language Model Sets New Bar for Multimodal Reasoning here

News-ID: 4144632 • Views: …

More Releases from Getnews

New Non-Invasive Body Contouring Options Now Available at North Miami Cosmetic D …

Image: https://www.globalnewslines.com/uploads/2026/01/1769579098.jpg

"We're seeing patients who tried surgical options and faced long recovery times, scarring, and unexpected complications," said the medical director. "Our non-invasive injectables and energy-based technologies deliver real body contouring results. Patients leave the same day and return to their routines immediately, which is what most people want when weighing their options."

Del Campo Dermatology & Laser Institute in North Miami emphasizes personalized anti-aging care tailored to each patient's goals,…

Elder Care Homecare Expands Professional In-Home Support Services Across New Yor …

Image: https://www.globalnewslines.com/uploads/2026/01/1769799456.jpg

Elder Care Homecare announces continued growth and service expansion throughout New York, strengthening access to reliable, compassionate, and professional in-home care solutions for seniors and families. The organization remains committed to delivering personalized assistance designed to support independence, dignity, and overall well-being within the comfort of home.

As demand for dependable senior care continues to rise, Elder Care Homecare New York [https://eldercarehomecare.com/nyc/services/#:~:text=Our%20caregivers%20have%20received%20exceptional%20training%20and%20have%20the%20experience%20to%20support%20seniors] provides tailored care plans that address daily living…

Deer Mountain Equipment Expands Offerings in Clayton, WA

Image: https://www.globalnewslines.com/uploads/2026/01/1769782099.jpg

Deer Mountain Equipment, based in Clayton, WA, provides high-quality outdoor and industrial machinery, including construction tools, compact tractors, and heavy equipment. Known for reliable products, expert guidance, and flexible rental and sales options, the company supports professionals and hobbyists with durable solutions for a wide range of projects.

January 31, 2026 - Clayton, WA - The company continues to support professionals and hobbyists alike with a wide selection of machinery,…

Beyond the Horizon: Galleria Camaya Unveils the Poetic Abstractions of Loida Ber …

Image: https://www.globalnewslines.com/uploads/2026/01/1769739970.jpg

Galleria Camaya at the Hong Kong Visual Arts Centre

Loida Bernardo's solo show at HKVAC, presented by Galleria Camaya., features emotional abstract landscapes that celebrate Filipino National Arts Month this February in Central, Hong Kong.

This February, the Hong Kong Visual Arts Centre (HKVAC) will host a captivating solo exhibition by Filipino artist Loida "Baloi" Bernardo, organized by Gail Hills of Galleria Camaya and curated by Hong Kong-based artist Rodolfo Canete…

More Releases for GUI

OpenFOAM MATLAB GUI with CFDTool 1.2

CFDTool has been upgraded with built-in and completely seamless OpenFOAM GUI integration. This allows setting up and running, both laminar and turbulent OpenFOAM CFD simulations all within an easy and convenient GUI directly in MATLAB. With CFDTool, OpenFOAM case file generation steps such as

- automatic mesh generation, conversion, and extraction of 2D/axi-symmetric grids

- handles both constants and general expressions as boundary conditions (for example for parabolic inflow profiles)

- calculation of…

froglogic Releases Squish GUI Test Automation Tool Squish GUI Tester 5.0

Hamburg, Germany – 2013-06-25 froglogic GmbH today announced that Squish 5.0—a major new version of the popular Squish GUI Tester —is now available.

Squish GUI Tester is the market leading, functional test automation tool for cross-platform and cross-device GUI testing on desktop, embedded and mobile platforms as well as web browsers. More than 3,000 QA departments around the world benefit from its tight integration with each supported GUI technology enabling the…

Mobile GUI Test Automation: Squish Goes Android

Hamburg, Germany – 2012-06-12 froglogic announced Squish, its cross-platform automated GUI testing tool, will support automated testing for Android Apps on Android-powered devices and emulators.

The Squish GUI testing tool is the market leading tool for cross-platform GUI test automation on desktop, embedded and mobile platforms. By adding the Android Edition, Android App developers finally have the professional GUI testing tool option for software functional and regression testing.

"Squish for Android deeply…

Altia Unveils Nationwide ‘GUI Design Contest’

COLORADO SPRINGS, CO, January 12, 2011 – Altia, Inc., the leader in user interface tools, has announced a new contest that offers university students the opportunity to create an innovative user interface with the company’s award-winning software kit. Students are invited to use a trial version of Altia Design to build the most visually-appealing graphical user interface (GUI) possible. Projects will be judged by a first-rate panel of user experience…

GUI Test Automation: froglogic Releases Squish 4.1

Hamburg, Germany – 2011-07-27 froglogic GmbH today announced that Squish 4.1 - a new feature release of the Squish GUI Testing Tool - is now available.

Squish is the leading functional, cross-platform GUI and regression testing tool that can test applications based on a variety of GUI technologies, including Nokia's Qt Software Development Frameworks, Java SWT/Eclipse RCP, Java AWT/Swing, Windows MFC and .NET, Mac OS X Carbon/Cocoa, iOS CocoaTouch and Web/HTML/AJAX.…

GUI Testing Specialist froglogic Announces Eclipse Strategy

Hamburg, Germany - 2008-10-03 froglogic GmbH will announce its new Eclipse strategy at EclipseCon 2008 taking place in Santa Clara next

week. froglogic is the vendor of the leading, cross-platform automated

GUI testing tool Squish. Squish supports creating and running

automated GUI tests of applications based on a variety of user

interface technologies including Trolltech's Qt toolkit, Java

AWT/Swing/NetBeans, Java SWT/Eclipse RCP/JFaces, Web/HTML/AJAX and Mac

OS X Carbon/Cocoa.

froglogic's upcoming Squish 4.0 release will bring many

ground-breaking improvements…