Press release

Best AI Models: How to Choose Speed, Cost, and Quality

To choose the best AI model in 2026, look beyond brand names and evaluate three specific performance metrics: Quality (reasoning depth), Speed (latency and throughput in TPS), and Cost (token efficiency). High-reasoning models like GPT-5.2 lead in latency but may have lower uptime, while Gemini 3 Flash provides the highest throughput of the models measured here for high-volume tasks. The most efficient strategy for professional teams is to use an orchestration layer like https://zenmux.ai/ to implement "Model Routing," which automatically balances these metrics, for example by routing logic-heavy tasks to DeepSeek-V3.2 and high-speed tasks to Gemini 3 Flash, to get strong performance at a lower price.

The AI Model Trilemma in 2026: Navigating the Intelligence Explosion

As we move through late 2026, the artificial intelligence landscape has reached a stage of "hyper-specialization." According to reports from the Stanford Institute for Human-Centered AI, the "Intelligence Explosion" has evolved into a strategic "Trilemma": developers must constantly choose between the peak reasoning of frontier models, the near-instant response of flash models, and the cost-efficiency of open-weight alternatives.

In this competitive market, "one-size-fits-all" is no longer a viable AI strategy. A coding agent might require the deep logic of GPT-5.2, while a high-volume data categorization task might be better served by the efficiency of Gemini 3 Flash. Navigating this choice requires a close look at real-world performance metrics (latency, throughput in TPS, and reasoning benchmarks) to ensure your AI stack remains both powerful and profitable.

Defining Quality: Reasoning, Accuracy, and 2026 Benchmarks

In 2026, "Quality" is defined by a model's ability to perform complex, multi-step "Chain-of-Thought" (CoT) reasoning. Benchmarks like MMLU-Pro and HumanEval-XL are now the gold standards for measuring the cognitive depth of an AI engine.

Models like GPT-5.2 and Gemini 3 Pro occupy the high-quality tier, designed for tasks where logic and accuracy are non-negotiable. Meanwhile, DeepSeek-V3.2 (Thinking Mode) has emerged as a disruptive powerhouse for logic-heavy STEM tasks, offering recursive reasoning that rivals models three times its size.

● GPT-5.2 is designed with a strong focus on autonomous agency and uses advanced chain-of-thought reasoning. It is best suited for strategic planning, legal analysis, and building complex agent-based systems where long-term decision-making and structured reasoning are required.

● Gemini 3 Pro emphasizes multimodal logic with native multimodal reasoning capabilities, making it ideal for large-scale data synthesis and advanced video or audio reasoning tasks that require understanding across multiple input formats.

● DeepSeek-V3.2 (Thinking) prioritizes logical deduction through recursive reasoning, excelling in mathematics, deep debugging, and complex STEM-related tasks that demand precise, step-by-step problem solving.

Quality in the modern AI era is measured by the model's "reasoning density": its ability to maintain logical consistency across massive prompts and complex constraints. For enterprise-grade applications, the higher cost of these models is often justified by the reduction in human oversight and error correction.

Calculating Speed: Real-Time Throughput and Latency Performance

Speed is the most visible metric for the end-user. In the AI industry, speed is split into two categories: Latency (Time to First Token) and Throughput (Tokens Per Second - TPS). Based on the latest data from the ZenMux provider monitoring system, we can see a clear distinction between "Frontier Reasoning" and "High-Throughput Flash" models.

Understanding these metrics is vital: high throughput allows for faster content generation, while low latency ensures the model "reacts" instantly to user input.
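To make the two definitions concrete, here is a minimal Python sketch of how latency (Time to First Token) and throughput (TPS) could be derived from the arrival times of a streamed response. The function name `measure_stream` and the sample timestamps are hypothetical, and monitoring systems may define the throughput window differently (for example, by including the first token).

```python
from typing import Sequence


def measure_stream(request_sent: float, token_times: Sequence[float]) -> tuple[float, float]:
    """Return (latency_seconds, throughput_tps) for one streamed response.

    request_sent: timestamp when the request was sent.
    token_times:  arrival timestamp of each generated token, in order.
    """
    if not token_times:
        raise ValueError("no tokens received")

    # Latency (Time to First Token): the wait before the first token arrives.
    latency = token_times[0] - request_sent

    # Throughput: tokens per second once generation has started.
    # The first token marks the start of the window, so it is not counted.
    generation_time = token_times[-1] - token_times[0]
    if generation_time <= 0:
        return latency, 0.0  # single token: throughput is not meaningful
    throughput = (len(token_times) - 1) / generation_time
    return latency, throughput


# Hypothetical timestamps in seconds: first token at 0.5 s, then one every 0.1 s.
latency, tps = measure_stream(0.0, [0.5, 0.6, 0.7, 0.8, 0.9])
print(f"latency={latency:.2f}s throughput={tps:.1f} TPS")
```

Keeping the two numbers separate matters because a model can be quick to start and slow to finish, or the reverse.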

● Gemini 3 Flash (via ZenMux): 119.16 TPS throughput, 2.48 s latency, 98.51% uptime. It delivers the highest throughput of the group, earning its reputation as the high-throughput king for workloads that demand speed at scale.

● GPT-5.2: 40.46 TPS throughput, 0.45 s latency, 65.54% uptime. Its ultra-low latency makes it best suited for scenarios where fast response times and advanced reasoning are the top priorities, although its uptime is the lowest of the group.

● MiMo-V2-Flash: 38.43 TPS throughput, 2.33 s latency, 97.14% uptime. It offers a balanced efficiency profile, which makes it a reliable middle-ground option for consistent performance.

● DeepSeek-V3.2 (Thinking): 28.48 TPS throughput, 2.16 s latency, 100% uptime. It prioritizes reliability and logic, making it ideal for mission-critical reasoning tasks that demand maximum stability.

Throughput and uptime together shape the user experience. For data-heavy workflows, a consistent token flow of over 100 TPS is a significant step up.

As shown in the data, GPT-5.2 offers the fastest initial reaction (0.45 s), but Gemini 3 Flash is far superior for high-volume throughput (119.16 TPS). Note the uptime disparity as well: DeepSeek-V3.2 maintains 100% uptime, whereas OpenAI's GPT-5.2 provider currently experiences significant volatility (65.54% uptime).
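The trade-off between a fast first token and a fast stream can be put into numbers. The sketch below assumes a simple model in which total response time is latency plus output length divided by throughput; that formula and the function names are assumptions for illustration, not a ZenMux calculation. The latency and TPS figures are the ones reported above.

```python
def estimated_response_time(latency_s: float, tps: float, output_tokens: int) -> float:
    """Simplified estimate: wait for the first token, then stream at a constant rate."""
    return latency_s + output_tokens / tps


def break_even_tokens(lat_a: float, tps_a: float, lat_b: float, tps_b: float) -> float:
    """Output length at which model A and model B take the same total time.

    Assumes A has the lower latency and B has the higher throughput.
    """
    return (lat_b - lat_a) / (1 / tps_a - 1 / tps_b)


# Figures from the monitoring data quoted in this release.
GPT_52 = (0.45, 40.46)            # (latency in seconds, TPS)
GEMINI_3_FLASH = (2.48, 119.16)

for name, (lat, tps) in {"GPT-5.2": GPT_52, "Gemini 3 Flash": GEMINI_3_FLASH}.items():
    print(f"{name}: {estimated_response_time(lat, tps, 500):.2f} s for 500 output tokens")

crossover = break_even_tokens(*GPT_52, *GEMINI_3_FLASH)
print(f"Break-even output length: about {crossover:.0f} tokens")
```

Under this simplified model, GPT-5.2 finishes first only for short replies; beyond the break-even length, the higher throughput of Gemini 3 Flash outweighs its slower start.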

The Cost-Efficiency Matrix: Maximizing ROI via Model Routing

Managing token costs is the final piece of the selection puzzle. To deal with the fragmentation of the AI market, professional teams use ZenMux, which offers a unified gateway to 90+ LLMs. ZenMux allows you to move away from single-provider "lock-in" and instead build a flexible AI stack that scales with your budget.

According to the ZenMux Introduction, the platform serves as a vital orchestration layer: ZenMux provides a unified interface to interact with a wide range of Large Language Models (LLMs) from different providers.

By consolidating these providers, ZenMux enables a powerful cost-saving feature: Model Routing.

Strategic Optimization with ZenMux Model Routing

Model Routing is an intelligent feature of ZenMux that removes the need for manual model selection. Instead of hard-coding a specific model, the system evaluates the incoming request and directs it to the best-suited engine.

The system intelligently balances performance and cost based on the request content, task characteristics, and your preference settings.

For example, by analyzing the live performance data above, ZenMux can optimize your workflow:

1. Simple Classification: A user asks to "label this feedback." ZenMux routes this to Gemini 3 Flash for its 119 TPS throughput.

2. Logic-Heavy Tasks: A complex math prompt is routed to DeepSeek-V3.2 (Thinking), benefiting from its perfect 100% uptime and logical precision.

3. High-Risk Situations: If GPT-5.2 is down (given its current 65.54% uptime), ZenMux automatically switches to a high-quality fallback like Gemini 3 Pro to maintain service continuity.

This dynamic orchestration allows businesses to maintain high quality when needed while utilizing the cost-efficiency of models like MiMo-V2-Flash for routine tasks, ensuring the highest possible ROI on every token spent.
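The routing and fallback behavior described above can be illustrated with a short Python sketch. This is not the ZenMux API: the `ROUTES` table, the `route` function, and the task-type labels are hypothetical. The first choice for each task type and the GPT-5.2 to Gemini 3 Pro fallback follow the examples in this release; the other fallback entries are assumptions made for the sketch.

```python
from typing import Callable

# Preference order per task type (hypothetical labels).
# First entries mirror the examples in this release; later entries,
# apart from the GPT-5.2 -> Gemini 3 Pro fallback, are illustrative.
ROUTES: dict[str, list[str]] = {
    "classification": ["Gemini 3 Flash", "MiMo-V2-Flash"],
    "logic": ["DeepSeek-V3.2 (Thinking)", "GPT-5.2"],
    "agentic": ["GPT-5.2", "Gemini 3 Pro"],
}


def route(task_type: str, is_available: Callable[[str], bool]) -> str:
    """Return the first available model in the preference list for task_type."""
    try:
        candidates = ROUTES[task_type]
    except KeyError:
        raise ValueError(f"unknown task type: {task_type!r}") from None
    for model in candidates:
        if is_available(model):
            return model
    raise RuntimeError(f"no available model for task type {task_type!r}")


# Simulate a GPT-5.2 outage: the request falls through to the next candidate.
down = {"GPT-5.2"}
choice = route("agentic", lambda model: model not in down)
print(choice)
```

A real router would also weigh price and live performance data; the ordered fallback list is only the simplest form of the idea.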

Decision Cheat Sheet: Matching Tasks to the Right Model Type

To simplify your selection process in the current 2026 landscape, use this data-driven cheat sheet:

● Priority - Quality & Immediate Reaction: Choose GPT-5.2. At 0.45s latency, it is the most responsive reasoning model available, though it requires a fallback strategy due to provider stability.

● Priority - Raw Speed & Volume (TPS): Choose Gemini 3 Flash. With 119.16 TPS, it is the best tool for high-speed data extraction and real-time processing.

● Priority - Reliability & Logic: Choose DeepSeek-V3.2 (Thinking Mode). Its 100% uptime and dedicated reasoning mode make it the most dependable choice for STEM and technical tasks.

● Priority - Balanced Efficiency: Choose Xiaomi MiMo-V2-Flash. It provides steady performance (38.43 TPS) and high reliability (97.14% uptime) for general conversational workloads.

● Total Scalability: Deploy via ZenMux.ai to access 90+ models and use Model Routing to automate your speed, cost, and quality balance in real time.

Empowering Your AI Selection with Data-Driven Agility

The "best" AI model is not a fixed title; it is a choice that must be updated as providers and performance data fluctuate. In late 2026, the hallmark of a mature AI strategy is the ability to pivot between the ultra-responsive GPT-5.2, the high-throughput Gemini 3 Flash, and the reliable DeepSeek-V3.2. By relying on real-time metrics, such as the 119.16 TPS and 100% uptime tracked by the ZenMux platform, teams can move beyond marketing hype.

Building your application on a unified platform like ZenMux provides the agility required to survive the "Intelligence Explosion." With access to 90+ models and advanced Model Routing, you can keep your projects balanced across speed, cost, and quality. Start optimizing your AI stack today by following the ZenMux Quickstart Guide, and make sure your business is ready for the next evolution of AI.

Media Details:

Azitfirm

7 Westferry Circus, E14 4HD,

London, United Kingdom

--------------------

About Us:

AZitfirm is a dynamic digital marketing development company committed to helping businesses thrive in the digital world.

This release was published on openPR.

Permanent link to this press release:

Copy

Please set a link in the press area of your homepage to this press release on openPR. openPR disclaims liability for any content contained in this release.

You can edit or delete your press release Best AI Models: How to Choose Speed, Cost, and Quality here

News-ID: 4347002 • Views: …

More Releases from IQnewswire

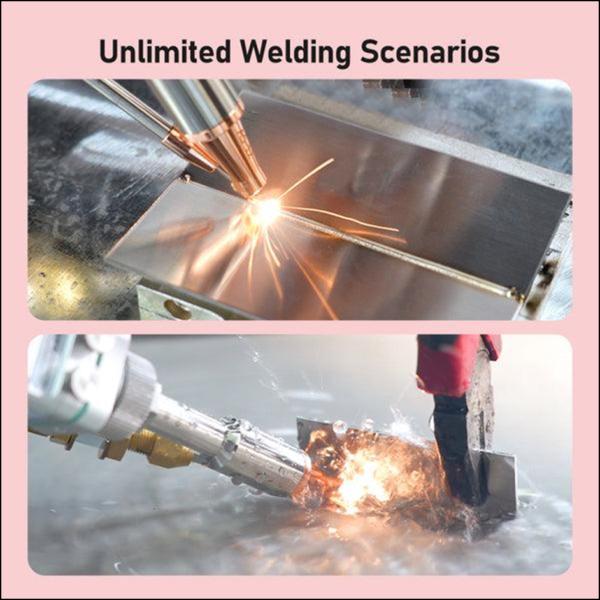

How Much Does a Laser Welding Machine Cost? A Practical Guide to Price, Cost, an …

When choosing a laser welding machine, many buyers face the same question:

Should the decision be based on laser welding machine price, or on the real laser welding machine cost?

In real purchasing conversations, most buyers usually ask the following questions:

● How much does a laser welding machine cost?

● Why is there such a big price difference between different machines?

● Which type of laser welding machine is suitable for my production?

This article is written based…

How Smart Visual Content Is Reshaping Modern Press Releases and PR Campaigns

In the crowded world of digital PR, getting noticed is no longer just about writing a solid press release. Editors, journalists, and readers are overwhelmed with information every single day. What cuts through the noise now is presentation-how a story looks, how quickly its value is understood, and how easily it can be shared across platforms. This shift is especially visible on press release distribution platforms like OpenPR, where thousands…

A Practical "Creative Factory" for Ads: Using VideoAny to Scale Variants Without …

If you need clips from scripts, concepts, or product benefits, use the AI Video Generator as the main workflow: https://videoany.io/. If you already have approved product images and want motion variations for testing, use Image To Video to generate fast, consistent creative: https://videoany.io/image-to-video.

1) The core problem in ads: speed vs. consistency

Ad teams typically face a trade-off:

● - Fast variants often look inconsistent, off-brand, or noisy.

● - High-quality production is consistent but slow…

Why CompTIA Security+ Is Your Ticket to a High-Paying Cybersecurity Career in 20 …

Over one million professionals worldwide now hold the CompTIA Security+ certification, making it a cornerstone for anyone entering cybersecurity roles in both American and international organizations. With cyber threats growing in complexity, employers demand verified skills that go beyond surface-level knowledge. This guide explains what makes CompTIA Security+ a critical credential, the expertise it measures, and how it empowers cybersecurity professionals to access advanced opportunities in a rapidly evolving industry.

Key…